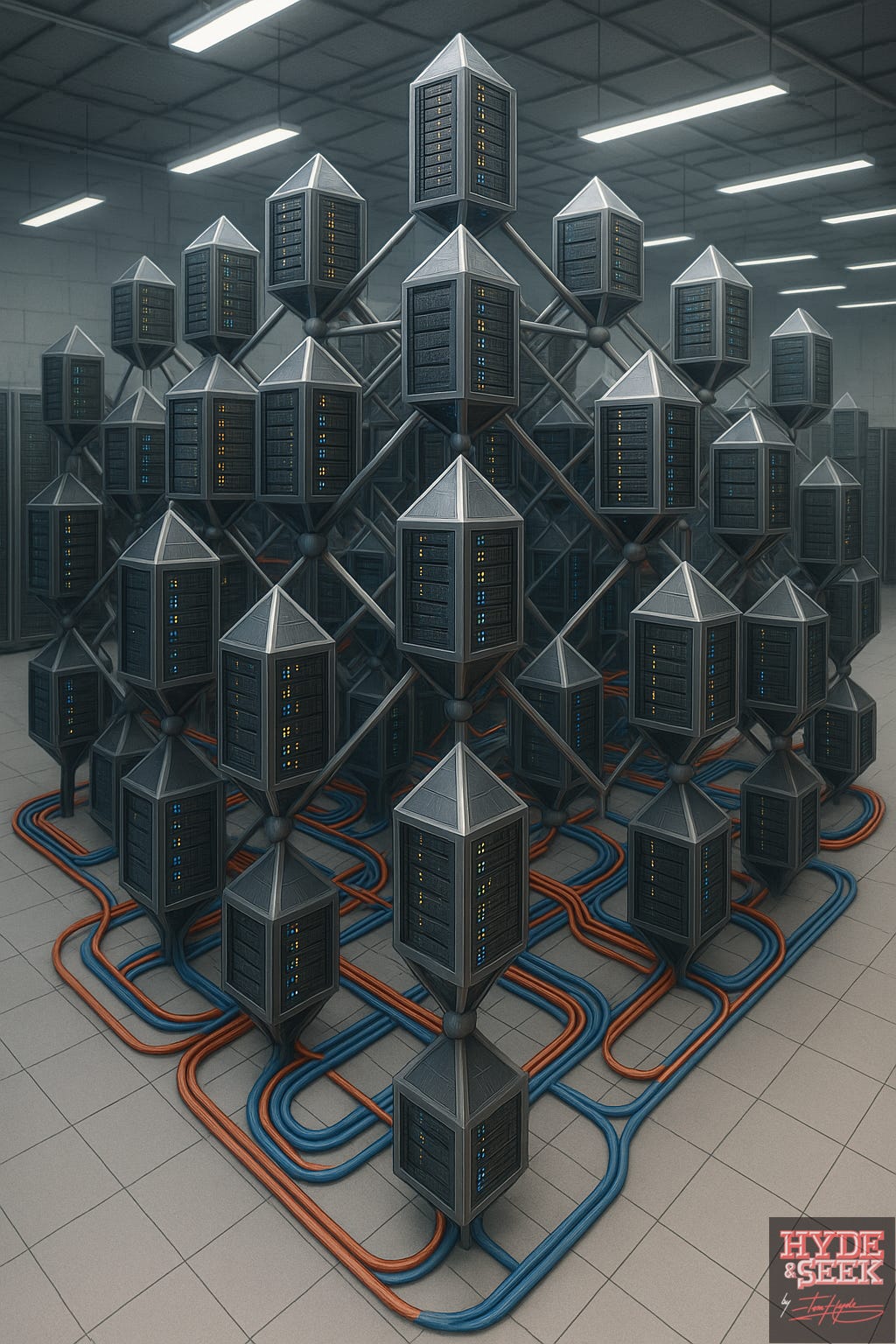

The Diamond-Standard of Server Architecture: A crystalline concept that calls time on the idea of racks

Modelling crystalline lattice structures to conceptualize a new mathematically-derived server layout architecture - delivering a 28.6% reduction in cooling energy requirements and 60% less cabling

The repercussions of exponential data growth are starting to collide with the physical and thermal limits of traditional infrastructure. As with many current challenges in data center design, there is a growing dependence on other professions and infrastructure providers to either innovate or implement the required solutions.

Whilst there is an argument for simply waiting for these developments, there is just as strong an argument for attempting to innovate around them. I've explored the idea of a novel wafer server design to overcome the inherent inefficiencies of fitting servers into a conventional rack, but there is another approach we could attempt.

This article introduces a provocative new approach: restructuring data center server layouts into three-dimensional lattice frameworks, inspired by atomic geometries found in crystalline solids.

This concept isn’t just aesthetic: it reshapes thermal dynamics within the data center, refines network topology and space utilization, and can be mathematically derived and optimized.

The initial workings show real promise, indicating savings of around 30% in energy and power requirements, with lower latency and less hardware, whilst maintaining the same compute capacity.

A diamond in the rough: the crystalline data center design

Rather than arranging servers in flat rows or vertical stacks, conceptualize them suspended in a 3D geometric lattice - such as a face-centered cubic (FCC) or diamond cubic structure. Each node (server) occupies a mathematically optimized “atom-like” position.

Key characteristics:

Uniform distribution - Servers are evenly spaced in all directions, preventing hotspots.

Atomic-level precision - Server placement based on lattice constants, akin to atomic spacing in solid-state physics. Algorithmic control of each server’s position to balance thermal load, compute density, and cabling length.

Tunneling airflow paths - Air circulates omnidirectionally—natural convection is enhanced due to the geometric openness.

Optimized cabling - Shorter, modular interconnections via lattice-aligned optical pathways.
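To make the idea of lattice-constant placement concrete, here is a minimal sketch that generates node coordinates for a face-centered cubic arrangement. The function names, the 1.2 m lattice constant and the 2x2x2 cell count are illustrative assumptions, not figures from the design.

```python
import itertools
import math

def fcc_positions(cells, a):
    """Coordinates (metres) for cells^3 conventional FCC unit cells, lattice constant a."""
    # Four basis points per conventional FCC cell, expressed in units of a.
    basis = [(0.0, 0.0, 0.0), (0.5, 0.5, 0.0), (0.5, 0.0, 0.5), (0.0, 0.5, 0.5)]
    points = []
    for i, j, k in itertools.product(range(cells), repeat=3):
        for bx, by, bz in basis:
            points.append(((i + bx) * a, (j + by) * a, (k + bz) * a))
    return points

def nearest_neighbour_distance(points):
    """Smallest centre-to-centre spacing between any two nodes."""
    return min(math.dist(p, q) for p, q in itertools.combinations(points, 2))

# Assumed values: 2 x 2 x 2 cells with a hypothetical 1.2 m lattice constant.
nodes = fcc_positions(cells=2, a=1.2)
spacing = nearest_neighbour_distance(nodes)
print(len(nodes), round(spacing, 3))
```

In an FCC arrangement the nearest-neighbour spacing is a/√2, so the lattice constant directly sets the clearance between servers and the length of the shortest interconnects.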

Further refinements and technologies could be integrated for non-invasive diagnostics, such as acoustic resonance monitoring. This mechanism is briefly explored later.

For this use case, some consideration should be given to a more suitable structural material. I've already outlined that much of the material selection in current server hardware is not well optimized for its use case, and that much of the ancillary power consumption and cooling simply compensates for design flaws that result from using low-quality materials.

To support this design physically and thermally, the first choice would be a carbon fiber-infused aluminum alloy:

Strength-to-weight ratio allows vertical expansion. Important for designs that will have to support loads of other adjoining racks.

Thermal conductivity ensures heat dispersion across struts.

EM shielding properties reduce cross-talk in dense configurations.

Alternatives could include a titanium honeycomb mesh (lightweight, high thermal resistance); an aerogel-supported steel scaffolding (excellent insulation, but likely high cost); or 3D-printed polymer-metal hybrids (customizable, with greater geometric freedom, but ultimately still limited by load-bearing capability).

Down to the Maths: A Proposed Optimization Formula for Network & Thermal Efficiency

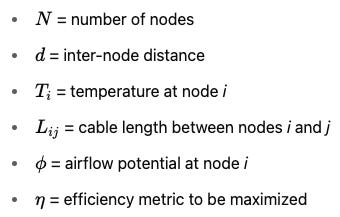

Let:

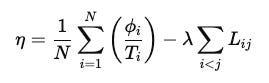

Suggested Optimization Function:

Where:

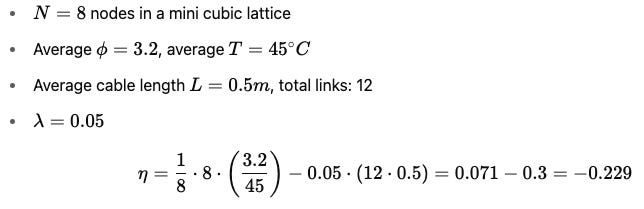

The first term encourages high airflow and low temperature.

The second penalizes long cable runs.

λ is a tunable constant to balance between thermal and network priorities.
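The description of the two terms can be turned into a small scoring function. The sketch below assumes one possible form, a sum of airflow-to-temperature ratios minus λ times the total cable length. The function name, the data layout, the units and the exact shape of each term are assumptions made for illustration, not the author's definition.

```python
import math

def layout_score(nodes, links, lam):
    """Hypothetical objective: thermal term minus lam times total cable length.

    nodes: dict of name -> (xyz position in metres, airflow in m^3/s, temperature in K)
    links: list of (name_a, name_b) pairs that are cabled together
    lam:   tunable weight balancing thermal against network priorities
    """
    # Assumed thermal term: rewards high airflow and low temperature.
    thermal = sum(airflow / temp for _, airflow, temp in nodes.values())
    # Assumed network term: straight-line cable length between linked nodes.
    cabling = sum(math.dist(nodes[a][0], nodes[b][0]) for a, b in links)
    return thermal - lam * cabling

# Two made-up layouts with identical thermal conditions but different spacing.
compact = {"s1": ((0, 0, 0), 0.5, 313.0), "s2": ((1, 0, 0), 0.5, 313.0)}
spread = {"s1": ((0, 0, 0), 0.5, 313.0), "s2": ((3, 0, 0), 0.5, 313.0)}
links = [("s1", "s2")]

compact_score = layout_score(compact, links, lam=0.01)
spread_score = layout_score(spread, links, lam=0.01)
print(compact_score > spread_score)
```

With equal thermal conditions the shorter cable run scores higher. Raising λ strengthens that preference, while lowering it lets the thermal term dominate.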

Worked Example

Benefits

1. Thermal Efficiency

Natural convection paths reduce fan load; distributed heat zones eliminate hotspots.

2. Reduced Network Bottlenecks

A peer-to-peer mesh topology reduces the network bottlenecks created by hardware such as switches; it also results in shorter average cable lengths, minimizing latency.

3. Space Utilization

Vertical stacking within spherical or hexagonal enclosures makes use of more of the available eaves height, and could reduce the overall footprint of the architecture by up to 40%.

4. Modular Scalability

Easy expansion by adding nodes in lattice-compatible vectors.

5. Advanced Monitoring

Acoustic diagnostics catch issues early with minimal disruption.

6. Architectural Freedom

Could remove the need for traditional top-of-rack switches in favor of direct optical interlinks.

Drawbacks & Challenges

1. Maintenance Complexity

Replacing a faulty node inside a dense lattice is labor-intensive, though potentially achievable with robotic assistance to extract servers. A failsafe modular access system may be required, but the overall design makes individual faults less critical, as traffic can be routed around them.

2. Cooling Design Overhaul

Traditional Computer Room Air Conditioning (CRAC) systems aren’t optimized for spherical or cubic layouts, and would therefore require custom airflow engineering or possibly immersion cooling integration.

3. Power Delivery

Complex internal routing needed for power cabling. There's a chance that high-density arrangements may induce parasitic losses (defined as any energy consumed or lost by components of the system that do not directly contribute to the net output or desired function) - unless carefully managed.

4. Higher Initial Cost

Custom materials and design engineering increase upfront costs, but modularity should reduce manufacturing costs over time, owing to the repeatability of a universally applicable design.

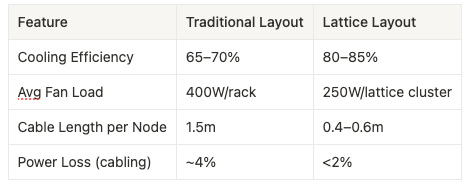

Putting it all together: The bottom line on cooling performance & power demand

Cooling Load Example:

Traditional 1MW data center = 350kW cooling load

Lattice model (same compute) = ~250kW

Assuming these figures, this results in a potential 28.6% reduction in cooling energy requirements over a traditional layout for the equivalent compute capacity, and over 60% less cabling per node.
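The percentage follows directly from the two cooling loads quoted above. A quick check, using only those figures (the variable names are illustrative):

```python
# Cooling loads quoted in the example, in kW, for the same 1 MW of compute.
traditional_kw = 350.0
lattice_kw = 250.0

# Relative reduction in cooling load.
reduction = (traditional_kw - lattice_kw) / traditional_kw
print(f"{reduction:.1%}")  # prints 28.6%
```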

Could this replace traditional architecture?

Probably not yet. The design concepts are strong enough, but they'll need to be tested and refined. There are plenty of angles for solutions, but this will depend on equivalent development in cooling methodology and power distribution. Not all existing infrastructure could readily be converted to this architecture, but new developments could incorporate it much more easily.

The lattice model would likely not negate network switches entirely, but may reduce switch hops and minimize intra-cluster switching load, especially with integrated optical mesh backbones. Ultimately, the mesh configuration supports more dynamic and efficient routing of traffic around the system, and there is less chance of hardware such as network switches acting as bottlenecks.
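As a rough illustration of routing around a fault in a mesh, the sketch below counts hops with a breadth-first search. It assumes a simple cubic 3x3x3 mesh with links only between axis-aligned neighbours, which is a simplification of the lattice rather than the proposed topology, and the function name is hypothetical.

```python
from collections import deque

def hops(size, src, dst, failed=frozenset()):
    """Fewest hops from src to dst on a size^3 cubic mesh, skipping failed nodes."""
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    queue = deque([(src, 0)])
    seen = {src}
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for dx, dy, dz in steps:
            nxt = (node[0] + dx, node[1] + dy, node[2] + dz)
            inside = all(0 <= c < size for c in nxt)
            if inside and nxt not in seen and nxt not in failed:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None  # destination unreachable

healthy = hops(3, (0, 0, 0), (2, 0, 0))
rerouted = hops(3, (0, 0, 0), (2, 0, 0), failed=frozenset({(1, 0, 0)}))
print(healthy, rerouted)  # prints 2 4
```

In this toy case, losing the node on the direct path costs two extra hops but does not isolate the destination.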

Additional innovations: Acoustic resonance monitoring for non-invasive diagnostics.

In crystalline materials, phonons - the quantized vibrations of atoms - carry both heat and sound through the structure. By drawing inspiration from this principle, acoustic resonance monitoring in a lattice-structured data center could unlock continuous, non-contact diagnostics of physical and thermal states.

How It Works

Each server node in the lattice acts as both a vibration source and a resonance receptor. By embedding small, low-power ultrasonic transducers and microelectromechanical system (MEMS) microphones into the structural struts or adjacent to the server housings, a distributed acoustic field could be created across the lattice.

Servers or embedded emitters periodically emit controlled sound pulses in the 15–40 kHz range. These propagate through the air and frame, reflecting and resonating in predictable patterns. The resulting signals are captured by strategically placed receivers.

Sensor & Software Integration

Sensors: Piezoelectric Ultrasonic Transducers, MEMS Microphones; or Laser Doppler Vibrometers for advanced surface vibration detection

Monitoring Software: Real-time DSP Engine for Fast Fourier Transform (FFT) analysis, resonance fingerprinting and anomaly detection via AI pattern matching

On-Premises Orchestration Software: Historical acoustic signature logging and heatmap generation (correlated with thermal, power, or vibration metrics), with API integration into data center infrastructure management platforms.

Example Use Case: Fan Degradation Detection

Under normal operation, fan A emits a characteristic acoustic fingerprint (stable frequency peaks, reported in research as lying somewhere between roughly 16.7 kHz and 19.4 kHz).

Over time, bearing wear subtly shifts the frequency profile.

MEMS sensors detect this deviation well before physical failure, and before any temperature spike is observed.

System flags early maintenance opportunity in data center infrastructure management dashboard.
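The steps above can be sketched as a peak-drift check. To stay dependency-free, this example uses the Goertzel algorithm over a handful of candidate frequencies instead of a full FFT, and it runs on synthetic tones rather than real fan recordings. The 96 kHz sample rate, the 17.0 kHz and 17.3 kHz peaks and the 150 Hz drift threshold are all assumed values.

```python
import math

def goertzel_power(samples, sample_rate, freq):
    """Signal power at one frequency, computed with the Goertzel recurrence."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq / sample_rate)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2

def dominant_peak(samples, sample_rate, candidates):
    """Candidate frequency (Hz) carrying the most power."""
    return max(candidates, key=lambda f: goertzel_power(samples, sample_rate, f))

def tone(freq, sample_rate, n):
    """Synthetic stand-in for a fan recording: a pure sine at freq."""
    return [math.sin(2.0 * math.pi * freq * i / sample_rate) for i in range(n)]

SAMPLE_RATE = 96_000                      # assumed sensor sample rate, Hz
N = 960                                   # 10 ms window, 100 Hz resolution
CANDIDATES = range(16_000, 20_001, 100)   # scan band, Hz
DRIFT_LIMIT_HZ = 150                      # hypothetical maintenance threshold

baseline_peak = dominant_peak(tone(17_000, SAMPLE_RATE, N), SAMPLE_RATE, CANDIDATES)
worn_peak = dominant_peak(tone(17_300, SAMPLE_RATE, N), SAMPLE_RATE, CANDIDATES)
needs_maintenance = abs(worn_peak - baseline_peak) > DRIFT_LIMIT_HZ
print(baseline_peak, worn_peak, needs_maintenance)
```

A real deployment would need windowing, noise handling and per-fan baselines. The point here is only that a shift in the dominant peak can be detected and thresholded with very little computation.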

Other Applications:

Detecting cable tension anomalies (indicative of movement or thermal expansion)

Structural resonance shifts caused by overheating nodes

Cross-link correlation to detect air blockage or changes in airflow pathways

Benefits of Acoustic Monitoring in Lattice Architecture

Non-intrusive - No physical contact or internal node access required

Continuous - Always-on background diagnostics with negligible power draw

Predictive - Enables proactive maintenance scheduling and thermal rebalancing

Complementary - Works alongside thermal sensors and telemetry to cross-validate anomalies

Cost-effective - Uses inexpensive, scalable components

Utilizing such data for management efficiency

Dynamic load shifting - If resonance shifts correlate with mechanical strain or cooling inefficiency, workloads can be shifted automatically to cooler or more stable zones.

Cooling strategy adaptation - Resonance data highlights air flow stagnation or vibration-induced inefficiencies, triggering fan ramp-ups or duct reconfiguration.

Infrastructure aging models - Long-term signal degradation is used to predict structural fatigue or material wear, prompting modular replacements before failures.
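Dynamic load shifting could, for instance, be reduced to a simple rule: move work off any zone whose resonance drift exceeds a limit, onto the coolest zone that is stable and has headroom. The sketch below assumes this rule; the `Zone` fields, the thresholds and the sample readings are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Zone:
    name: str
    temp_c: float              # current zone temperature
    resonance_drift_hz: float  # deviation from the zone's acoustic baseline
    load: float                # fraction of capacity in use, 0..1

def plan_shifts(zones, drift_limit_hz=150.0, max_load=0.8):
    """Return (source, target) pairs moving work away from drifting zones."""
    # Targets must be acoustically stable and have spare capacity.
    stable = [z for z in zones
              if z.resonance_drift_hz <= drift_limit_hz and z.load < max_load]
    moves = []
    for zone in zones:
        if zone.resonance_drift_hz > drift_limit_hz and stable:
            target = min(stable, key=lambda z: z.temp_c)  # coolest stable zone
            moves.append((zone.name, target.name))
    return moves

# Made-up readings for four zones.
zones = [
    Zone("A", temp_c=31.0, resonance_drift_hz=300.0, load=0.6),
    Zone("B", temp_c=27.0, resonance_drift_hz=20.0, load=0.5),
    Zone("C", temp_c=24.0, resonance_drift_hz=10.0, load=0.9),
    Zone("D", temp_c=25.0, resonance_drift_hz=40.0, load=0.4),
]
moves = plan_shifts(zones)
print(moves)  # prints [('A', 'D')]
```

Zone C is the coolest but is skipped because it is already near capacity, so the drifting zone A is pointed at zone D instead.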

This lattice approach draws from physics, material science, and systems engineering to break away from the legacy flat-pack model. While challenges evidently remain, the potential for radical energy and space efficiency gains, coupled with improved fault detection and modularity, makes it a compelling principle that should be considered further.

TH
