AWS is perfectly positioned for a hostile takeover of conventional data centers in a way that no one could predict

AWS's Snow Family was created for data migration, but its modularity puts AWS a baby step away from a bigger opportunity hiding in plain sight.

In 2015, Amazon Web Services took the covers off the Snow Family: a series of physical devices designed to reduce the friction of moving traditional users from local storage to cloud computing.

The premise was simple. Amazon's research suggested that many businesses stayed with local data storage because migrating to the cloud was difficult: large volumes of data, long uploads over slow internet connections, or a lack of staff, knowledge, time or resources.
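To give a sense of scale, here is a back-of-envelope sketch of how long a bulk upload takes over a network link. The data volumes, link speeds and the `efficiency` parameter (the fraction of nominal link speed actually achieved) are illustrative assumptions, not figures from AWS.

```python
def transfer_days(data_terabytes: float, link_megabits_per_s: float,
                  efficiency: float = 1.0) -> float:
    """Days needed to push a data set over a network link.

    Uses decimal units: 1 TB = 10**12 bytes, 1 Mbps = 10**6 bits per second.
    `efficiency` is an assumed fraction of the nominal link speed achieved.
    """
    if link_megabits_per_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    bits = data_terabytes * 10**12 * 8
    seconds = bits / (link_megabits_per_s * 10**6 * efficiency)
    return seconds / 86_400  # seconds in a day


# Illustrative volumes and link speeds only (assumptions, not AWS data).
for tb, mbps in [(10, 100), (100, 100), (100, 1000)]:
    print(f"{tb} TB over {mbps} Mbps: {transfer_days(tb, mbps):.1f} days")
```

Under these assumptions, 100 TB over a 100 Mbps link takes roughly three months of uninterrupted uploading, while a tenfold faster link cuts that to under ten days, which is why faster connections have eroded the case for shipping a physical device.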

Their solution was a series of devices shipped to the customer. Data was copied onto the device on site over high-speed network connections or transfer cables, and the device was then shipped back to Amazon, where AWS staff loaded the data onto data center servers, ready for the customer to access in the cloud.

The service is still running after ten years, though demand has reportedly slowed. The customer base is shrinking because many have already migrated to the cloud, and a Snow migration is a one-time sale. Over the same decade, internet speeds have risen enough that some customers simply upload their data themselves.

Whilst smaller, portable devices such as the Snowball remain part of the offering, the largest device, the Snowmobile, was retired around April 2024. It has been rumoured that it was never actually used, apart from moving some of Amazon’s own data between its servers in 2017. Even if that’s the case, it made quite the marketing spectacle when an 18-wheeler was driven on stage at its 2016 announcement, and the free coverage from the world’s press probably generated enough sales that its redundancy never particularly mattered.

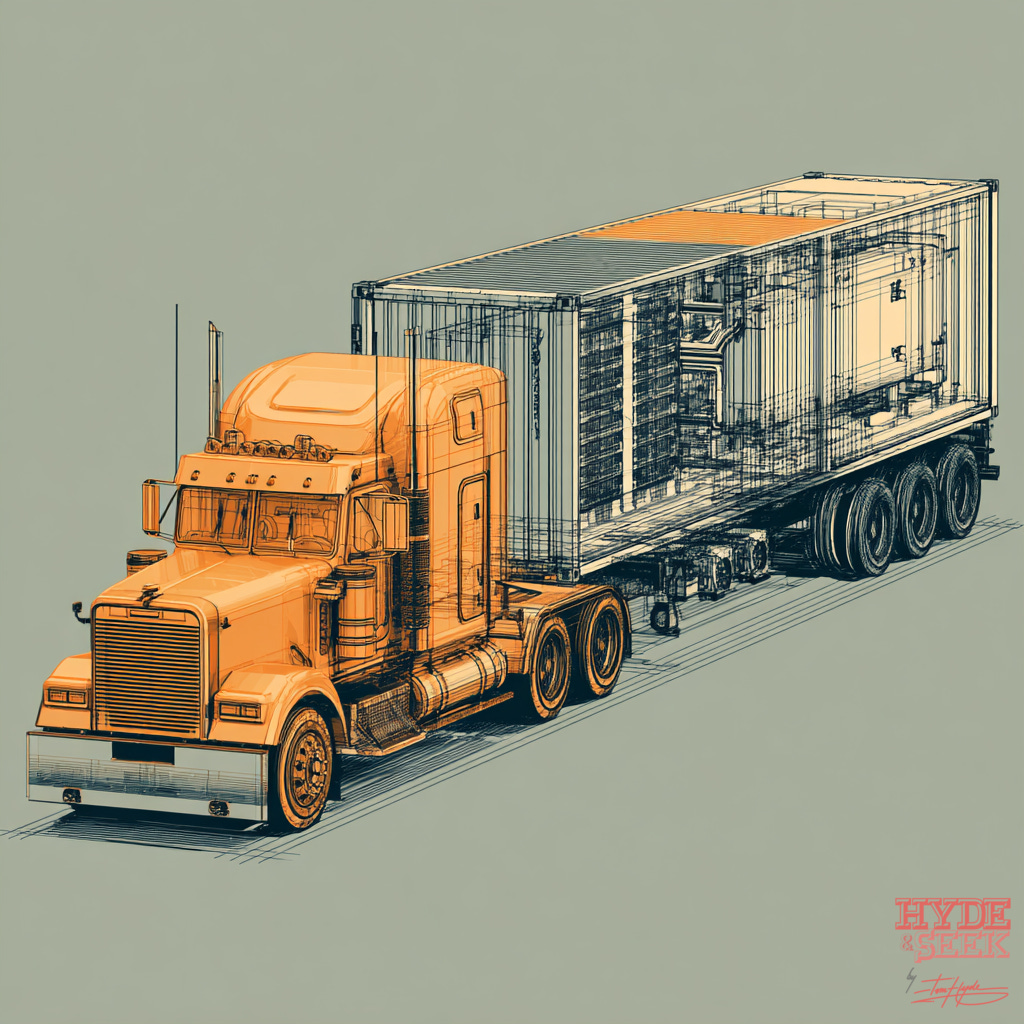

Although the Snowmobile has been retired, the idea could have a bigger second life: not as a data migration tool, but as the data center itself.

The holy grail of nearly all manufacturing is modularity: quick, easy and open-ended expansion through uniform, linear scaling that keeps pace with growing demand. It’s precisely why shipping containers find a myriad of uses beyond simply getting goods from A to B. Their size, weight and specification are standardized, and they are stackable, durable and compatible with a wide variety of applications.

I’m suggesting the Snowmobile could form the basis of a modular approach to data centers. Instead of migration hardware, each unit would be fitted out with the server racks, cooling systems and power connections it needs to run, and additional units could be stacked and linked in series or parallel to scale as required. You could end up with a data center of one shipping container, or of 50 or more, depending on your requirements.

There’s far more focus on conventional, permanent data center construction than on a modular approach, yet the reality is that expansion is rather hamstrung for the time being.

The hype is bigger than the ability to hyperscale

If there is a data center buzzword in 2025, it is hyperscale. Hyperscale is a lovely idea in theory, but for a few reasons it does not work in practice for every application, on every site, in every location, at all times.

The hyperscale dream is often just that. In an ideal world, hyperscale would become the standard for all data center construction, but the infrastructure needed to operate a hyperscale data center lags well behind the technology to build one:

Archaic power grid infrastructure, often dating back to around the 1950s.

Remote areas with poor or no connectivity, whether power, data or both.

And finally, areas with significant water shortages, excessively warm climates or drought conditions, which can pose far greater problems.

The rollout of hyperscale data centers is constrained far more by these factors than by an operator's ability to build a bigger building with more servers in it.

Hyperscale installations are a solution, but not a universal one.

AI’s hungry for hyperscale only

The sheer compute and infrastructure needed to train and run large AI systems far exceed the capacity of traditional compute data centers. This suggests that if a hyperscale installation is being pursued, it is almost certainly for AI, since that workload cannot be accommodated anywhere else.

However, this doesn’t mean that demand for conventional cloud and compute data centers is shrinking, or even static. Storage requirements continue to grow, and will keep growing in proportion to our reliance on, and investment in, the digital economy.

Addressing the infrastructure bottleneck, and why it’s the anchor to real progress

Demand for data centers of all kinds will keep rising rapidly, driven by the advent of AI and the ever-growing volume of new digital content. There is demand for many new data centers, but we will have to accept that not all of them can be hyperscale. Some cannot even be medium scale, and some may have to be built at the smallest of scales, because of the bottlenecks created by archaic, underinvested infrastructure.

Infrastructure improves relatively slowly, and upgrades often depend on public money raised through taxation. This limits how much can be upgraded at any one time, on top of the shortage of skills needed to deliver such huge projects globally.

Therefore, not every data center can be built at hyperscale immediately, but we should continue a uniform rollout of conventional facilities wherever practicable. A modular approach is probably the wisest: small-scale data centers can be rolled out now, with capacity expanding gradually as infrastructure is upgraded over time.
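That staged rollout can be sketched as a simple sizing calculation: how many containers full demand would need, versus how many today's grid connection can actually power. Every figure here (the per-container IT load, the overhead factor for cooling and losses, the demand and the grid capacity) is a hypothetical assumption for illustration, not a real AWS or Snowmobile specification. The overhead factor plays the same role as the power usage effectiveness (PUE) ratio, total facility power divided by IT power.

```python
import math
from dataclasses import dataclass


@dataclass
class ContainerSpec:
    # Hypothetical figures for illustration; no real AWS specification is implied.
    it_load_kw: float       # compute (IT) load one container can host
    overhead_factor: float  # total grid draw / IT load (like PUE): cooling, losses

    @property
    def grid_draw_kw(self) -> float:
        return self.it_load_kw * self.overhead_factor


def plan_deployment(demand_it_kw: float, grid_capacity_kw: float,
                    spec: ContainerSpec) -> tuple[int, int]:
    """Return (containers for full demand, containers deployable on today's grid)."""
    if spec.it_load_kw <= 0 or spec.overhead_factor < 1:
        raise ValueError("IT load must be positive and overhead factor >= 1")
    needed = math.ceil(demand_it_kw / spec.it_load_kw)
    powerable = math.floor(grid_capacity_kw / spec.grid_draw_kw)
    return needed, min(needed, powerable)


spec = ContainerSpec(it_load_kw=400, overhead_factor=1.25)
needed, now = plan_deployment(demand_it_kw=10_000, grid_capacity_kw=6_000, spec=spec)
print(f"{needed} containers for full demand; {now} deployable on today's grid")
# prints: 25 containers for full demand; 12 deployable on today's grid
```

Re-running the calculation as grid capacity is upgraded shows the deployable count climbing towards the full requirement, which is the gradual expansion a modular approach relies on.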

The modular elevator pitch

An entire post could be written on the design of a modular data center unit the size of a shipping container. Here are the key arguments:

A tried and tested, durable outer shell, capable of withstanding the conditions found across the large proportion of the world with relatively temperate climates.

Sufficient room to house a decent amount of compute power.

Sufficient additional space for a hybrid cooling setup, relying predominantly on glycol-based direct-to-chip cooling, and stealing Microsoft's homework from Project Natick by filling the sealed chambers with nitrogen at higher pressure to create a stable, thermally efficient environment for the equipment.

A simplified manufacturing process that can run on a production line, much like traditional manufacturing, using almost entirely standardized, off-the-shelf parts.

Easy and rapid to deploy. The units could potentially be classed as temporary structures, in which case they would not attract the same regulation, planning or permit requirements as a permanent installation, even if they only serve as a stop-gap until the necessary infrastructure is in place.

And, of course, infinitely scalable, with units adaptable to each site and deployed in series to meet demand.

Why AWS over the other operators?

Any of the major operators could adopt this model. But I’ll state the obvious: AWS is part of Amazon. Google, Microsoft, Apple, Meta and many other wealthy players have the funds to explore the idea, but only Amazon has the customer base and the expertise to deploy it with relatively little friction:

A well-established and rapidly expanding logistics business, capable of easily integrating shipping container distribution.

Significant in-house experience in manufacturing and physical product development.

The full existing skillset to integrate the hardware into the AWS platform from many remote sites.

The ability to deploy next-generation connectivity through satellite communications, via Amazon's Project Kuiper constellation, its answer to SpaceX’s Starlink.

Millions of square feet of globally distributed industrial real estate, capable of housing installations permanently or temporarily, much of it already equipped with the minimum infrastructure needed to run them, effectively providing a turnkey route to deployment.

Together, these mean that if Amazon pursued this approach with even a little effort, it would be largely untouchable: no other competitor has this level of resource available, and for Amazon it is a relatively easy vertical integration.

Meanwhile, the other large competitors are likely to double down on AI infrastructure, as their skillsets and resources are far better aligned with developing and deploying software and other digital tools. That leaves a ripe opportunity for Amazon to corner the compute data center market and, once it has made significant progress, to acquire some of the smaller operators in the space to secure more real estate and infrastructure.

If this premise holds any truth, it spells equal parts promise and problem. Improved infrastructure that can expand organically over time will only serve all of us better, but there is potential for a monopoly to form in cloud computing if the market is not carefully monitored.

As a concept, modular data centers are a robust solution. However, as with all modern developments in technology, we must ensure they are implemented within a free market in order to protect the wider population.

TH
